¶ Data Warehouse Policies 🚧

¶ Daily Loading to Snowflake Data Warehouse

The daily load starts at the beginning of each business day, when the Data Analyst's workday begins. It follows a step-by-step procedure so that data is integrated into our systems smoothly and on time.

The computer used for the daily loading of data is the DTT Server, located in the General Services Area. The Data Analyst can control this computer remotely through TeamViewer. Data cannot be extracted from a personal computer on work-from-home (WFH) days, because LMS data is accessible only through the local network.


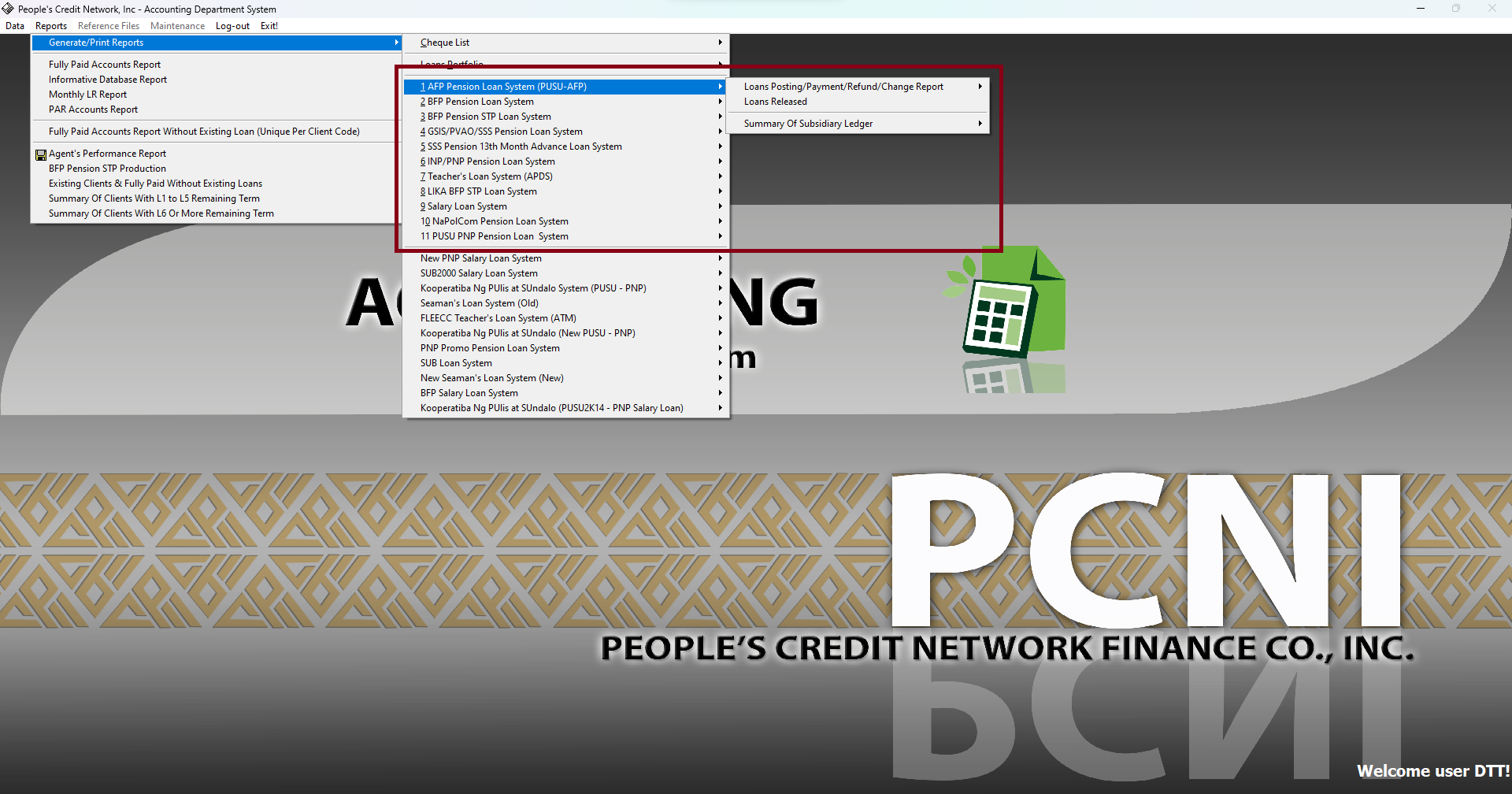

¶ Extract

- First, extract Releases and Collections for all products from LMS.

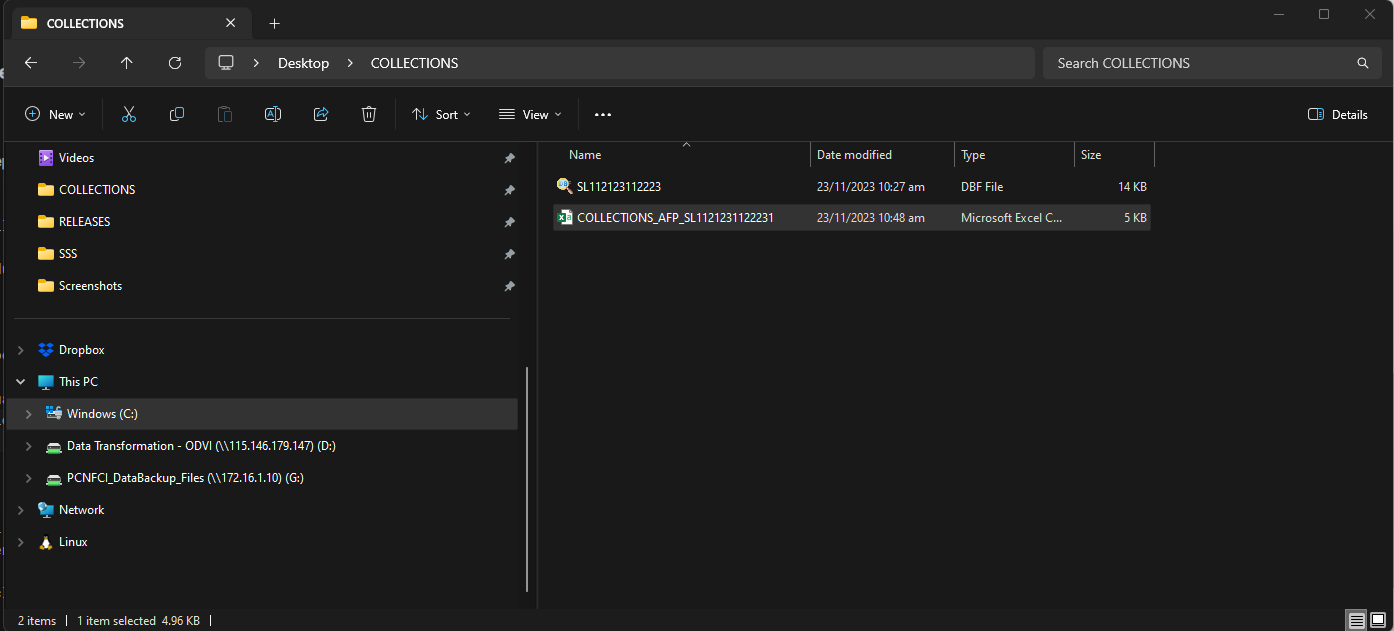

- Rename the files after every extraction. Examples: COLLECTIONS_PNPINP_SL112123112223, RELEASES_PNPINP_rel112123112223

See this link for the DTT standard naming conventions for the products. You can access it with the dtt@oakdriveventures.com account.

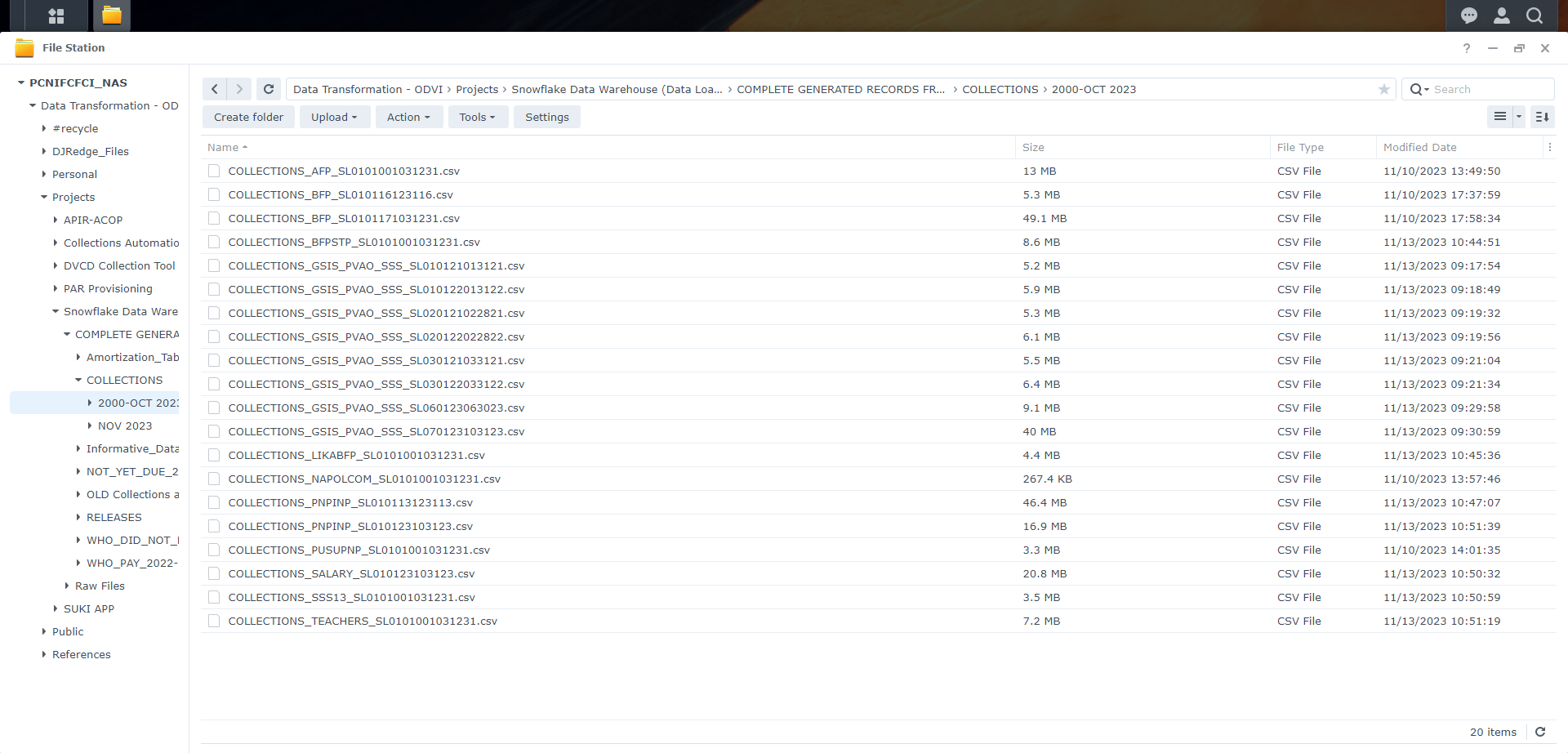

- Second, group the extracted files into Releases and Collections and save them in the two corresponding folders on the Desktop. Inside each folder, follow the existing folder structure, which is organized by extraction date.
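The filing step above could be scripted. The following is a minimal sketch, not the team's actual tooling: the function names, the ISO format used for the date folders, and the root folder passed in are all assumptions made for illustration.

```python
from datetime import date
from pathlib import Path
import shutil

# Maps the file-name prefix to the folder it belongs in.
FOLDERS = {"RELEASES": "Releases", "COLLECTIONS": "Collections"}


def destination_for(file_name: str, root: Path, extracted_on: date) -> Path:
    """Return <root>/<Releases|Collections>/<YYYY-MM-DD>/<file_name>.

    The YYYY-MM-DD folder name is an assumption; use whatever date
    format the existing folders already follow.
    """
    prefix = file_name.split("_", 1)[0].upper()
    if prefix not in FOLDERS:
        raise ValueError(f"Unrecognized file prefix: {file_name}")
    return root / FOLDERS[prefix] / extracted_on.isoformat() / file_name


def file_extraction(source: Path, root: Path, extracted_on: date) -> Path:
    """Move one extracted file into its dated folder and return the new path."""
    target = destination_for(source.name, root, extracted_on)
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.move(str(source), str(target))
    return target
```

For example, calling `file_extraction` on RELEASES_PNPINP_rel112123112223 with the Desktop as `root` would move the file into the Releases folder, under a subfolder named after the extraction date.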


¶ Transform


¶ Load

Insert here the daily loading process, including time and schedule. Should this just go inside the analytics policies, or should it really be separate?

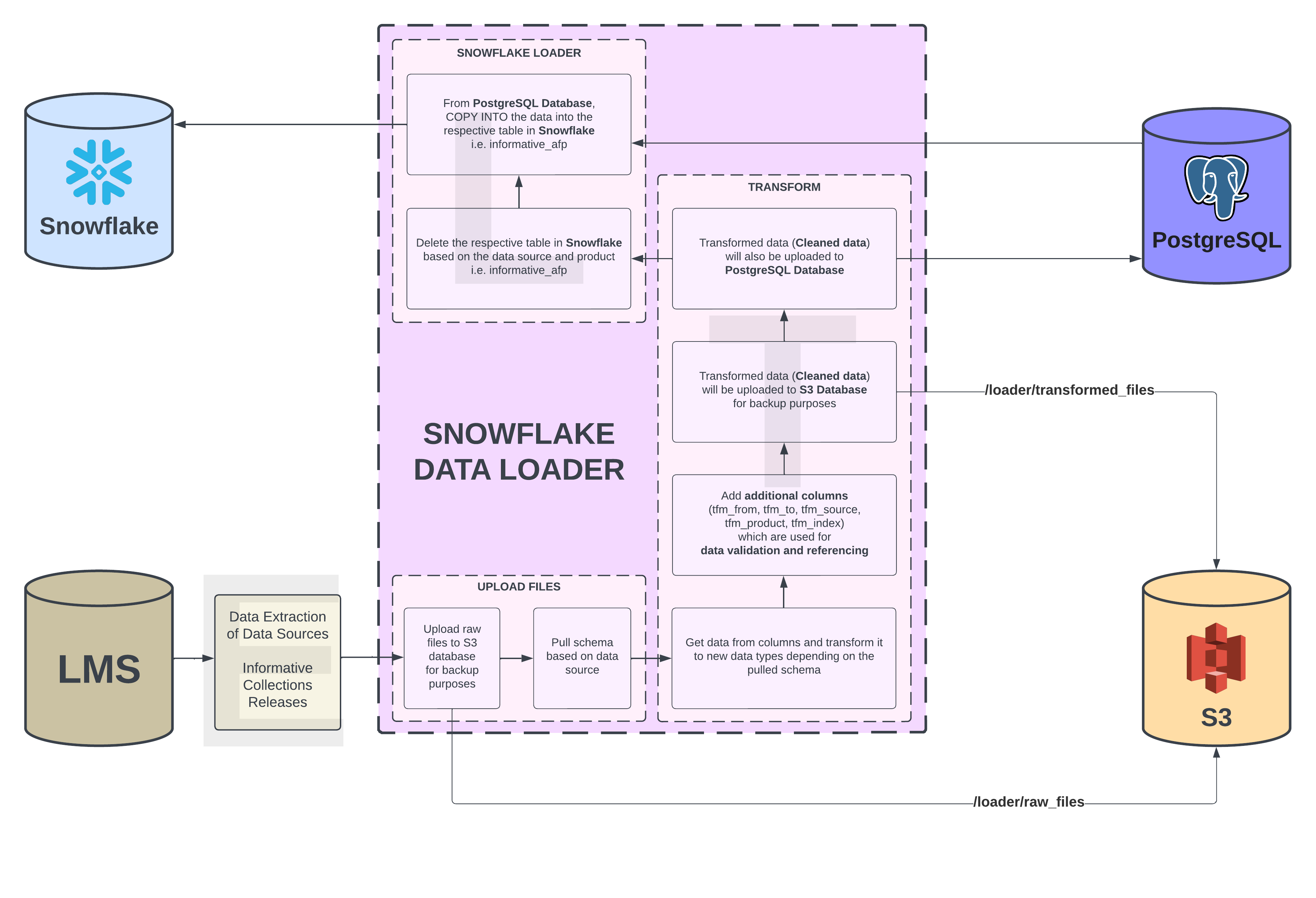

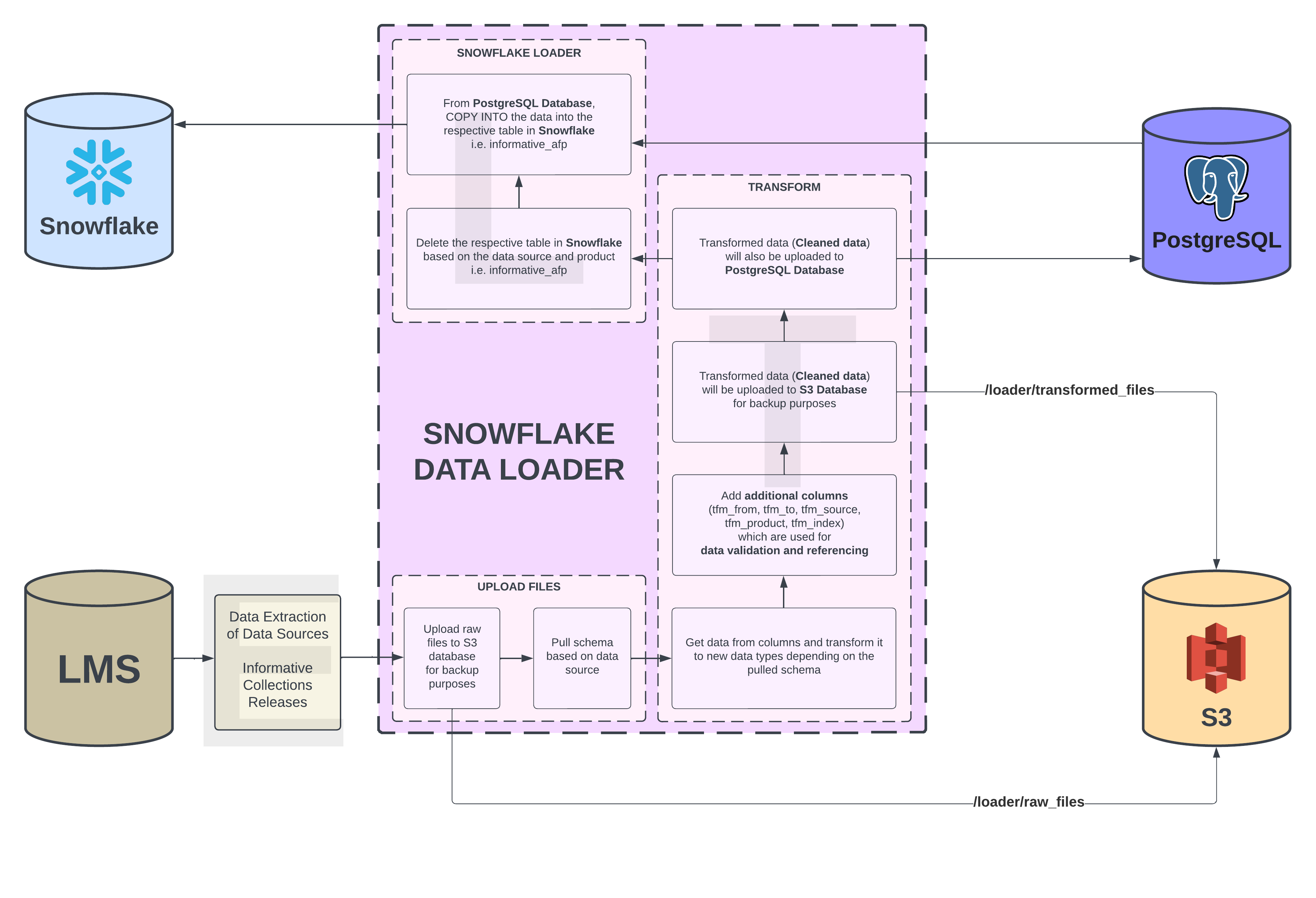

¶ Data Warehouse Infrastructure (copy from Airbyte) 🚧

¶ Data Storage and Management Guidelines 🚧

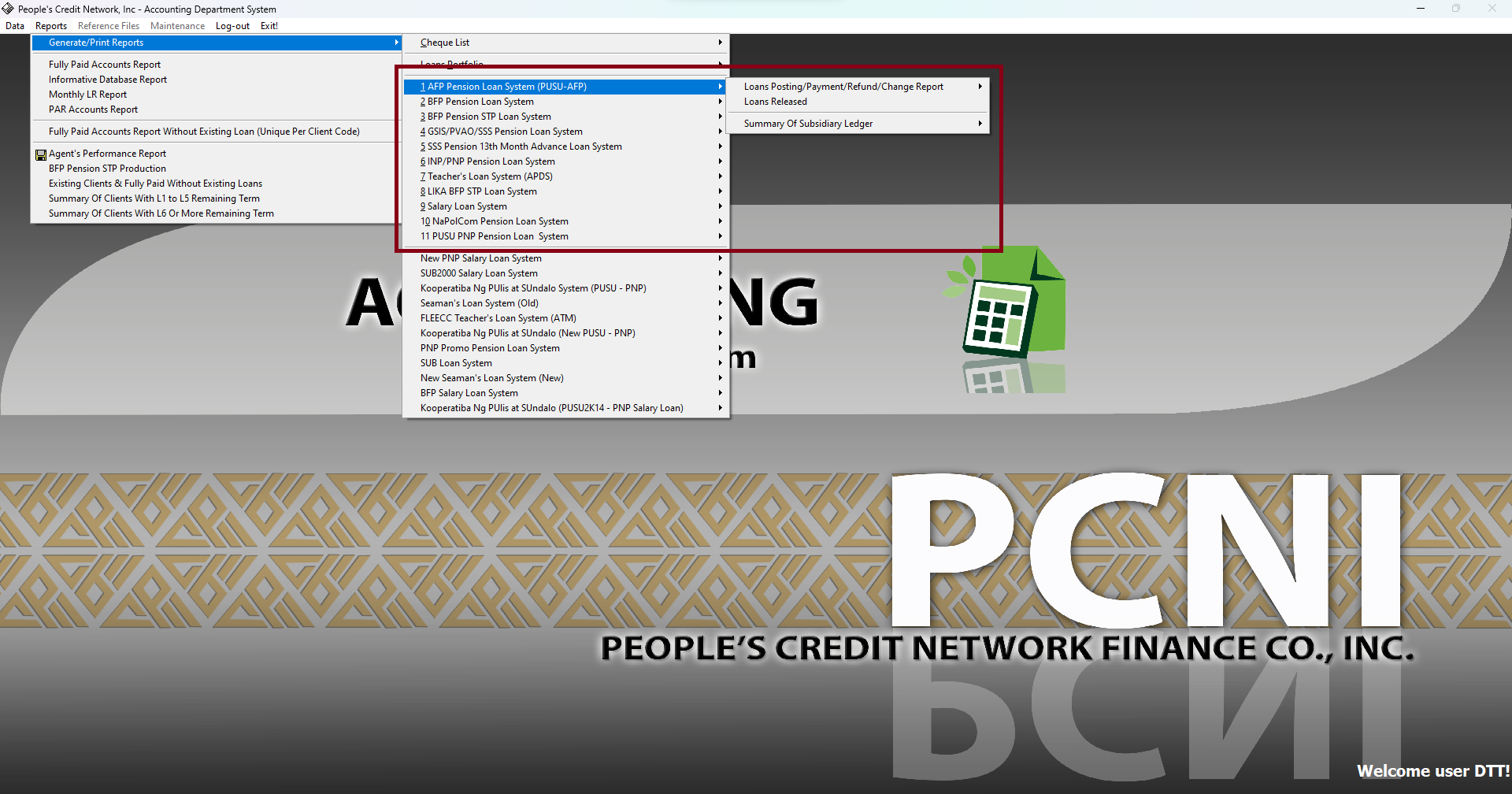

¶ Data Sources 🚧

- Informative

- Releases

- Collections

- Who Did Not Pay

- Who Pay

- Not Yet Due

- Amortization

- Cancelled Loans

¶ Data Collection & Integration Process 🚧

Daily process for loading data (step-by-step procedure)

- The process starts at the beginning of each business day, when the Data Analyst's workday begins, and follows the steps below.

1. Data Extraction from LMS, Per Product:

- Data is extracted from LMS daily. This is the first task of the Data Analyst's day.

Each product is extracted separately to keep the data granular and product-specific.

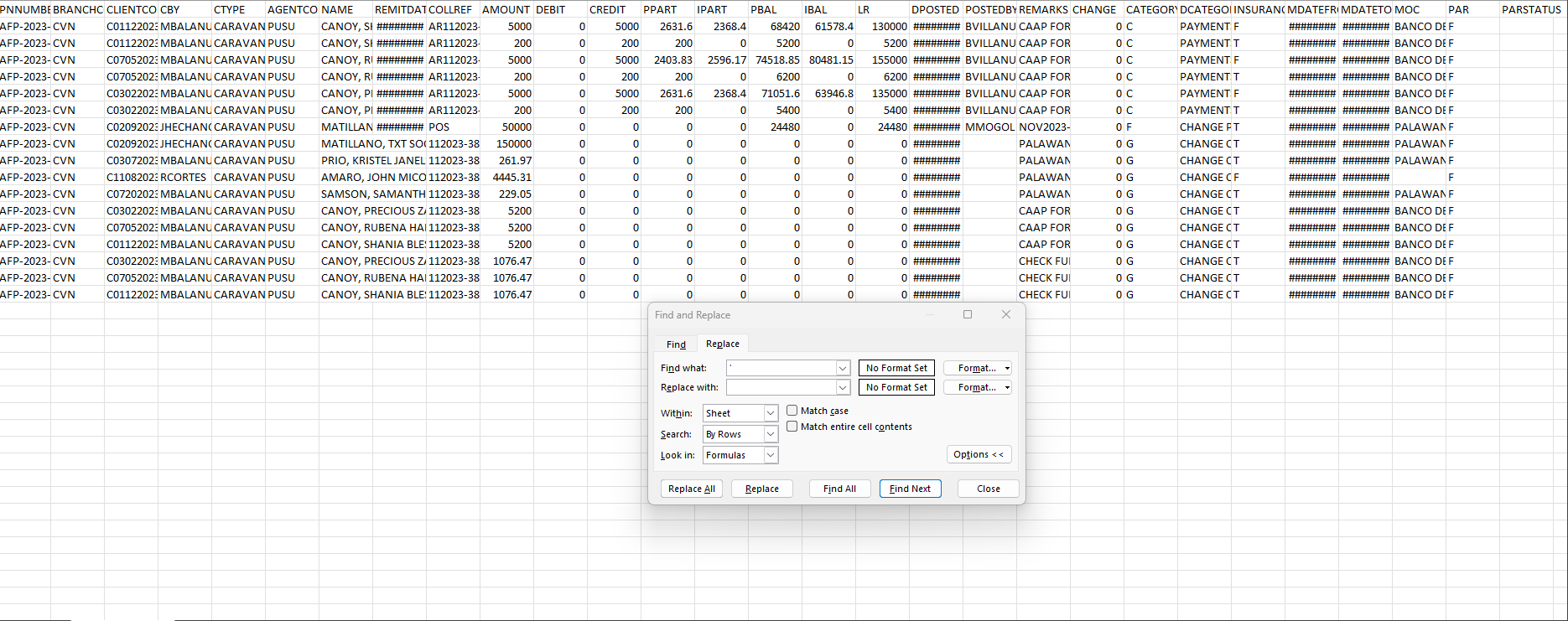

2. Error Checking:

- Extracted data is checked for errors so that inconsistencies and unexpectedly added columns are found and corrected. Standards are applied to keep the data accurate and uniform.

The steps are: converting the file to CSV, renaming the file after its product for uniformity, and checking for errors and other inconsistencies.

Checking for errors and inconsistencies inside a file:

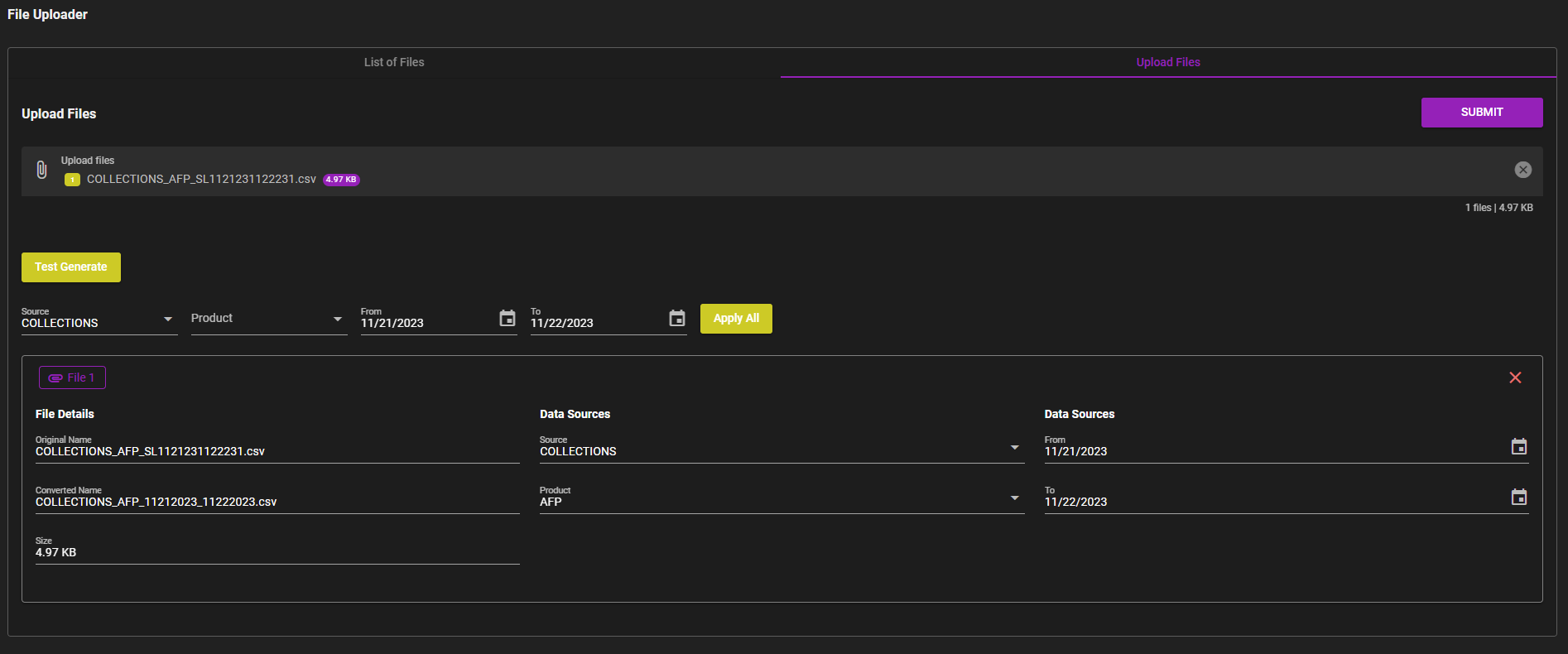
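One part of this check, spotting added columns, lends itself to a small script. The sketch below assumes each product has a known list of expected column names; the column names shown in the usage note are hypothetical, since the actual LMS export layout is not documented here.

```python
import csv
import io


def header_differences(csv_text: str, expected: list[str]) -> dict[str, list[str]]:
    """Compare the first row of a CSV against the expected column names.

    Returns the columns that were added and the columns that are missing,
    each in the order they appear.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = [name.strip() for name in next(reader, [])]
    return {
        "added": [name for name in header if name not in expected],
        "missing": [name for name in expected if name not in header],
    }
```

With a hypothetical expected layout of `["loan_id", "amount", "release_date"]`, a file whose header is `loan_id,amount,extra` would be reported with `extra` as added and `release_date` as missing.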

3. Data Upload into Data Loader:

- After checking, the data is uploaded into the Data Loader tool for further processing, with the files segregated by product. The Data Loader automates the loading process, which improves efficiency and reduces the risk of manual errors.

This step passes the checked data on to the next stage of the pipeline.

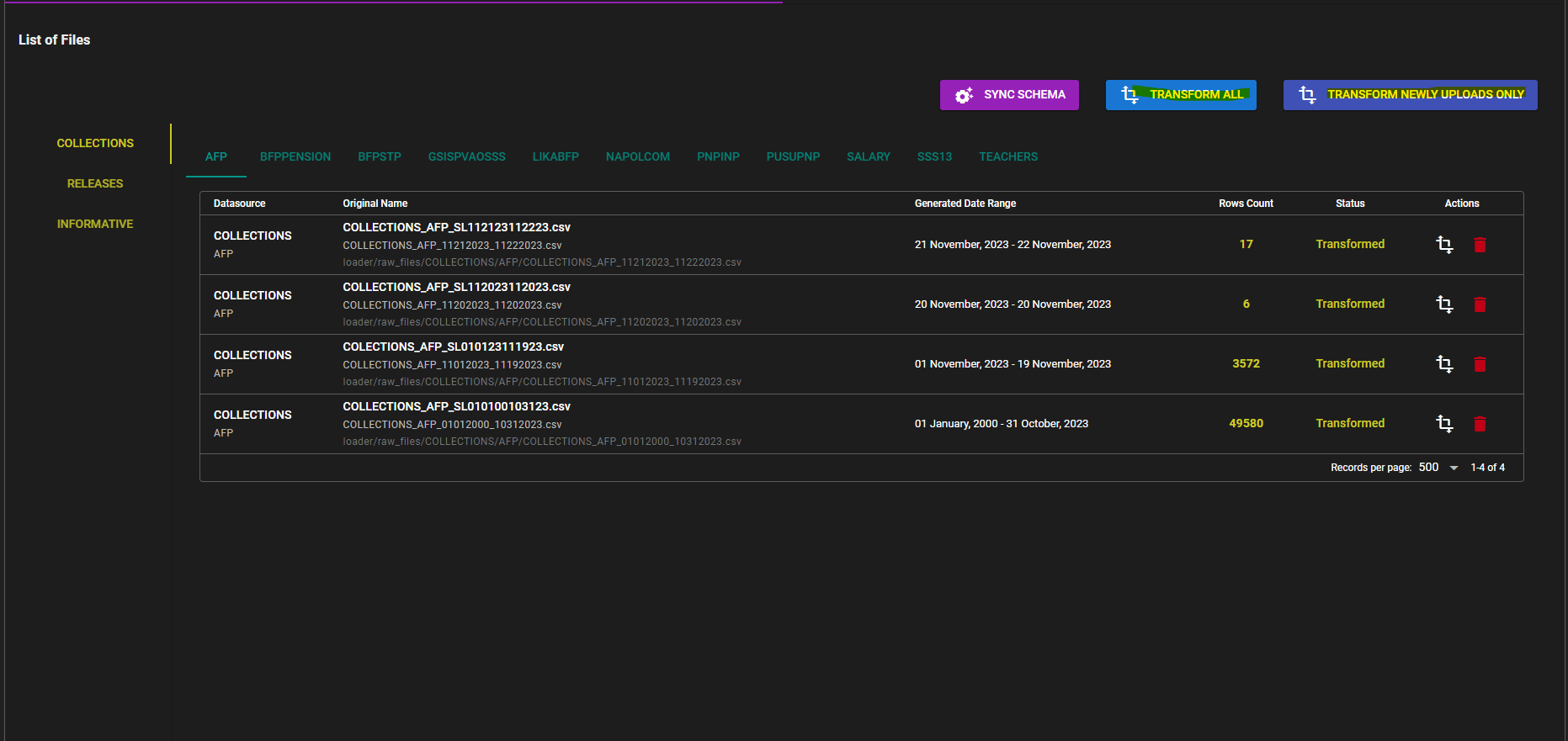

4. Data Cleaning and Transformation:

- The uploaded data is cleaned and transformed so that it is structured and organized according to a predefined schema.

Transformation ensures that the data is in a format compatible with the target data model.

This step prepares the data for integration into the Snowflake data warehouse.

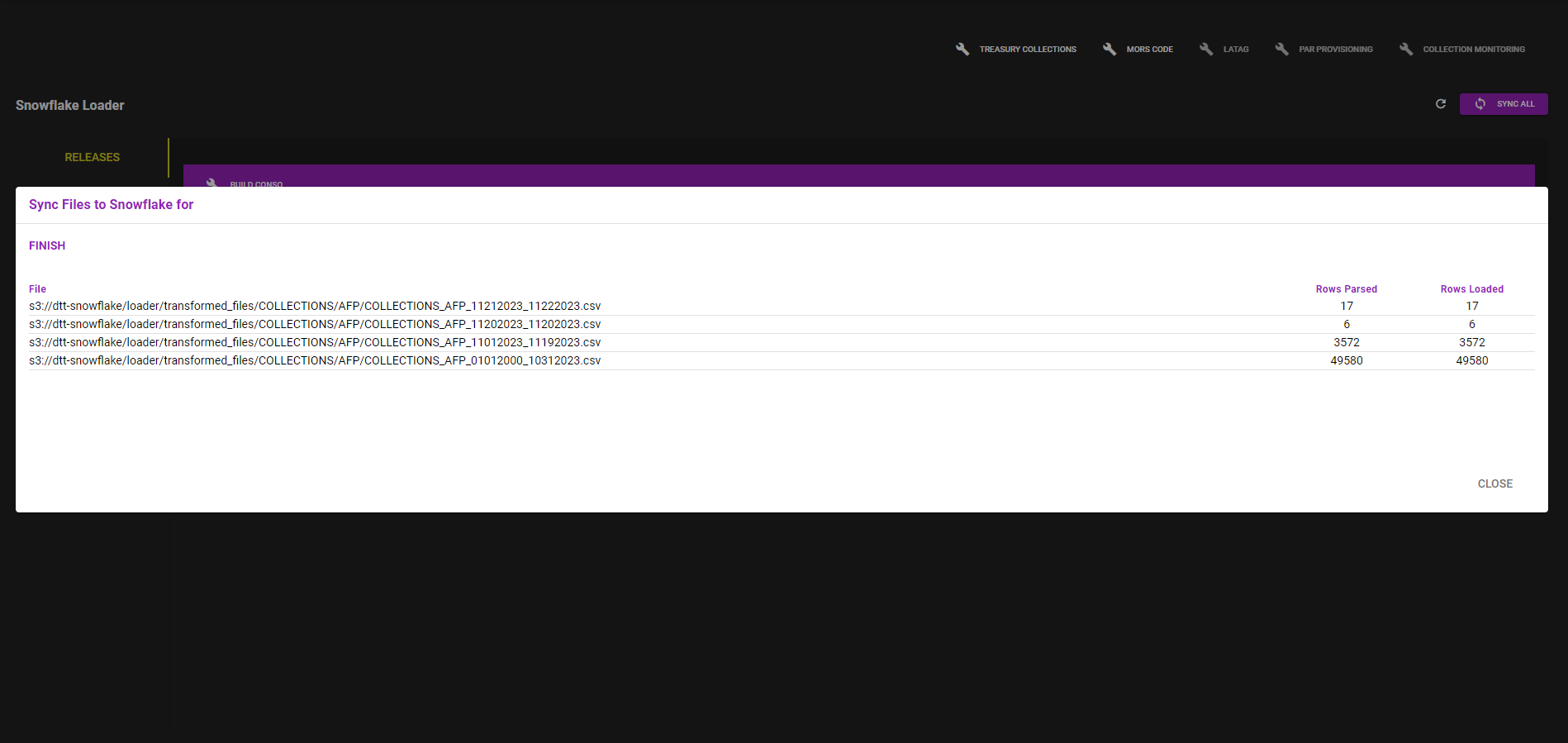

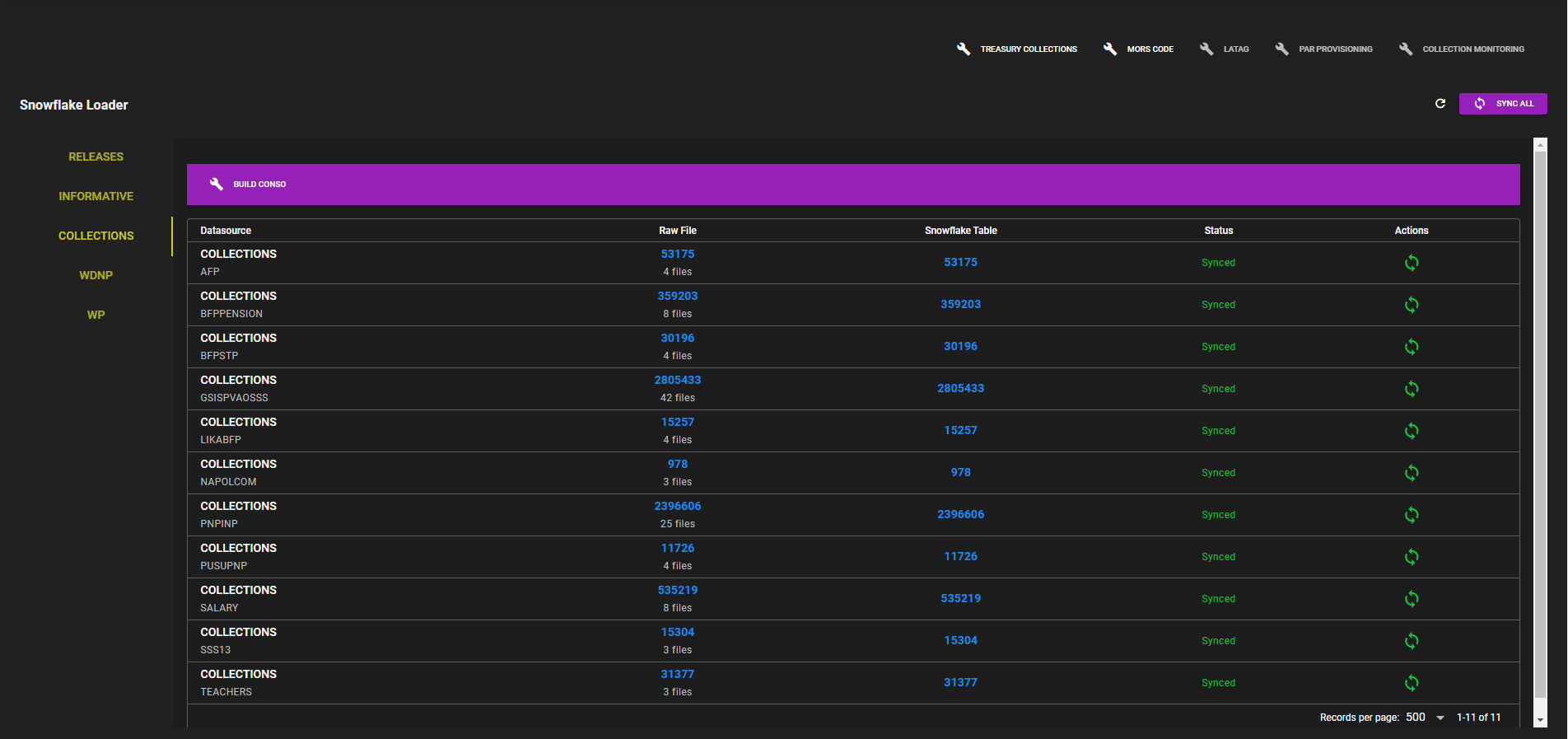

5. Data Synchronization into Snowflake:

- Transformed data is synchronized into Snowflake, our designated cloud-based data warehousing platform, where it is loaded for further analysis. Once synchronized, the data in Snowflake is ready for use in analytics and reporting.

¶ Data Cleaning and Transformation Standards

- A CSV file is required: convert every extracted file to CSV format.

- Segregate the extracted files by product and name them COLLECTIONS_PRODUCTNAME_DATE or RELEASES_PRODUCTNAME_DATE.

- Check for ' and " symbols, as these may crash the Data Loader tool.

- Use the correct product name for each file, because loading a file into the wrong table may corrupt tables that have already been created.

¶ ETL (Extract, Transform, Load) [Best Practices] Procedures

- Data Loader (Why)

¶ Data Quality Assurance and Validation

1. Checking That Extraction Is Complete:

- Regularly validate the data extraction process to ensure that it is complete and accurate. Put monitoring in place so that extraction issues are detected and addressed promptly. Check Data Loader Monitoring for any missing files.

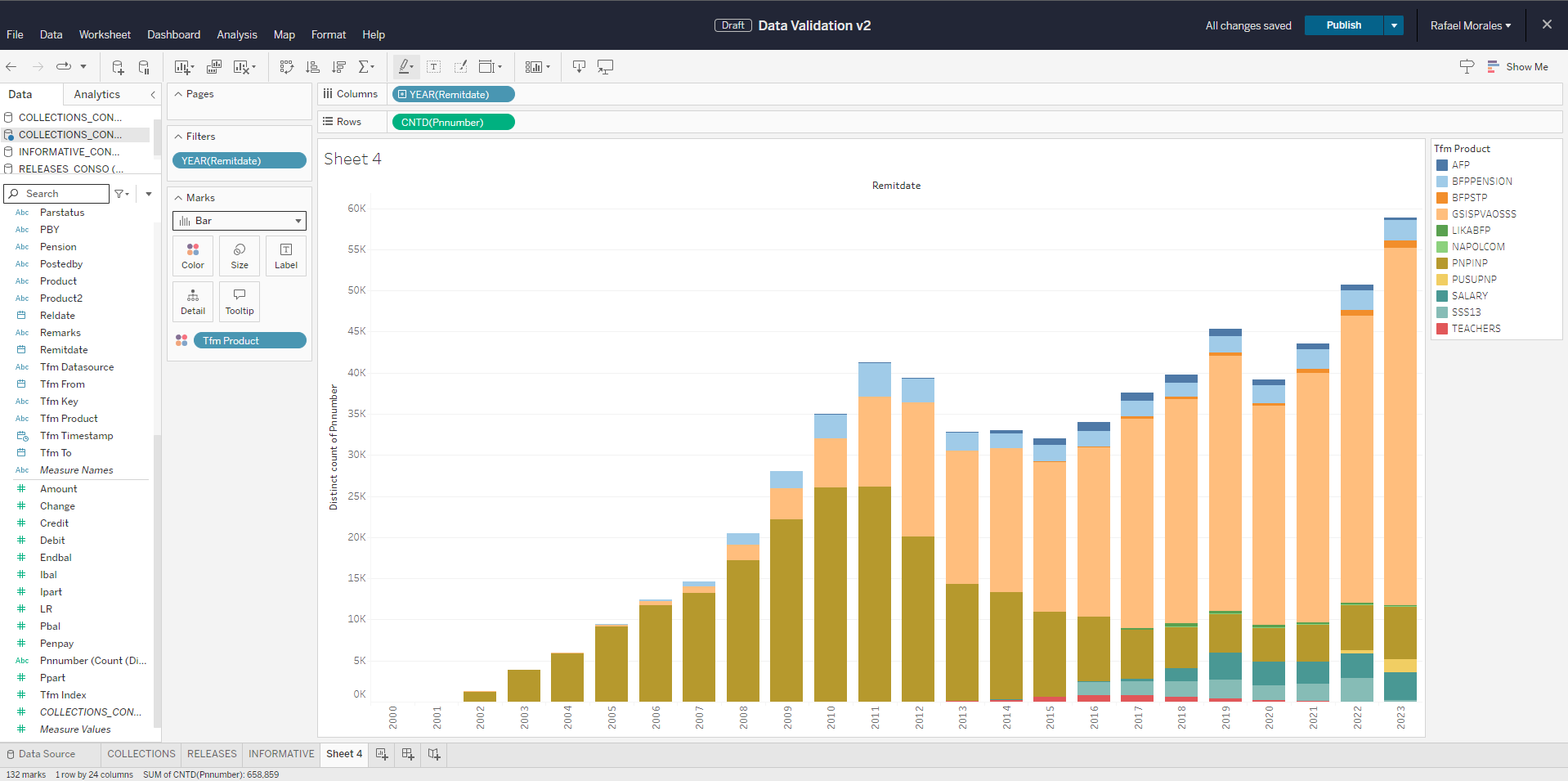

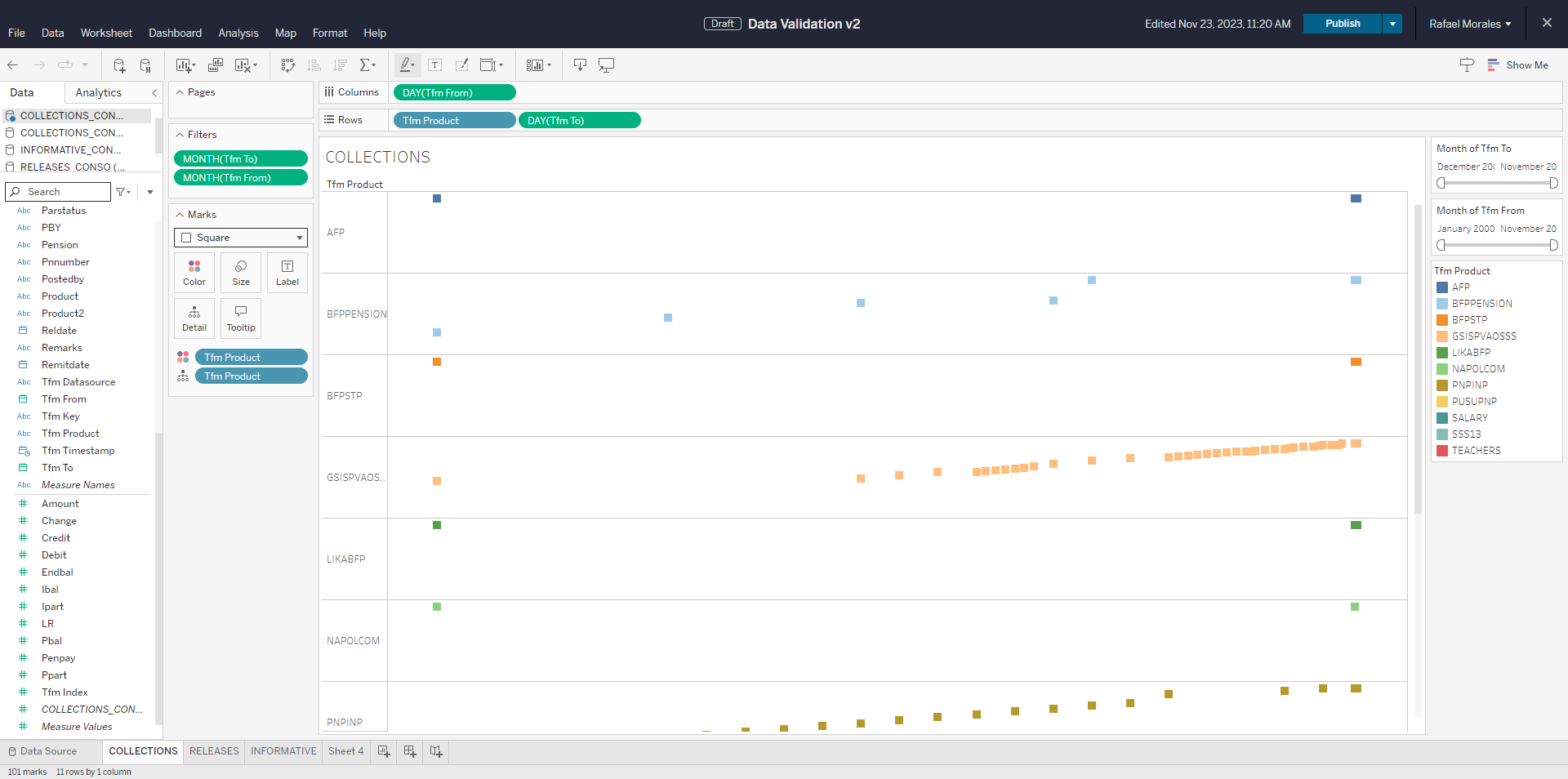
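Alongside Data Loader Monitoring, a completeness check can be run on the extracted file names themselves. The sketch below assumes every product should have one Releases file and one Collections file per day and that names follow the KIND_PRODUCT_DATE pattern; the product code ABC in the usage note is hypothetical.

```python
def missing_extractions(file_names: list[str], products: list[str]) -> list[str]:
    """List the KIND_PRODUCT combinations that have no extracted file."""
    found = set()
    for name in file_names:
        parts = name.split("_")
        if len(parts) >= 3:
            # Only the kind and product matter here; the date part is ignored.
            found.add((parts[0].upper(), parts[1].upper()))

    expected = [
        (kind, product.upper())
        for product in products
        for kind in ("RELEASES", "COLLECTIONS")
    ]
    return [f"{kind}_{product}" for kind, product in expected if (kind, product) not in found]
```

If the day's folder holds both PNPINP files but only a Releases file for ABC, `missing_extractions(names, ["PNPINP", "ABC"])` reports `COLLECTIONS_ABC`.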

2. Tableau Validation:

- Use Tableau to validate the data visually. Its visualizations help identify errors or anomalies in dates and products. Build custom dashboards that highlight discrepancies so that errors can be resolved quickly, and review them regularly so that discrepancies are addressed promptly.

- The visualization shows the segregation of products per year.

- It validates that the uploaded data has no missing dates.
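The missing-dates check that the Tableau view provides can also be expressed in a few lines of code, which may help when a gap needs to be pinned down precisely. This is a sketch only: it treats every calendar day in the range as expected, which is an assumption, so weekends and holidays would need to be excluded if no data is produced on those days.

```python
from datetime import date, timedelta


def missing_dates(loaded: list[date], start: date, end: date) -> list[date]:
    """Return every date from start to end (inclusive) that is not in loaded."""
    present = set(loaded)
    total_days = (end - start).days
    return [
        start + timedelta(days=offset)
        for offset in range(total_days + 1)
        if start + timedelta(days=offset) not in present
    ]
```

Given data loaded for 20 and 22 November 2023 and a range of 20 to 23 November, the function returns 21 and 23 November.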

¶ Security Measures for Data Warehouse

¶ Data Access and Permissions

Each member of the DTT team has their own Snowflake account, which gives them access to the data warehouse whenever they need to connect, create reports, or query a table.

¶ Backup and Recovery Procedures

- Each file loaded and synced into Snowflake is also saved to Synology Drive as a backup.
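If the backup copy is made by script rather than by hand, it is worth confirming that the copy matches the original. The following is a minimal sketch that assumes Synology Drive is reachable as an ordinary folder on the machine; the function names and the backup folder passed in are hypothetical.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 checksum of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def back_up(source: Path, backup_dir: Path) -> Path:
    """Copy a loaded file into the backup folder and verify the copy."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)  # copy2 also preserves file timestamps
    if sha256_of(source) != sha256_of(target):
        raise OSError(f"Backup of {source.name} does not match the original")
    return target
```

The original file is left in place, so the backup is an additional copy rather than a move.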